A realistic comparison to understand the actual energy appetite of AI Chatbots

Every time you ask ChatGPT to “explain quantum physics like I’m five”, or ask Google Veo 3 to generate a realistic video of yourself floating in a spaceship, somewhere in the world, a massive rack of GPUs sighs, rolls up its silicon sleeves, and gets to work while sucking up enough electricity to make your Dyson vacuum cleaner jealous. But have you ever wondered how much energy these seemingly simple prompts consume? Let us put things into perspective.

AI AND ENERGY

Sumedh Joshi

8/19/2025 · 3 min read

A home office laptop used for web browsing 8 hours a day consumes around 400 Wh/day (~50 W × 8 h). Running it every single day, as if we were always excited to work on weekends, adds up to 146 kWh/year. With a more realistic yearly consumption of 120 kWh, this activity accounts for approximately 1% of the average US household's annual electricity consumption (10,500 kWh/year). From an EV enthusiast's perspective, 120 kWh is roughly the energy a Tesla Model 3 needs for a round trip between NYC and Washington, DC, with enough charge left to grab a Starbucks and find a Tesla Supercharger up north in Connecticut.
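The laptop arithmetic above can be checked with a few lines of Python. This is a minimal sketch: the wattage, hours and household figure come from the text, while the 300 working days per year is an assumption, chosen only because it is what the 120 kWh figure implies at 400 Wh/day.

```python
# Laptop figures from the text: ~50 W for 8 hours a day.
LAPTOP_WATTS = 50
HOURS_PER_DAY = 8
HOUSEHOLD_KWH_PER_YEAR = 10_500  # average US household, from the text

# Assumption: ~300 working days/year, which reproduces the 120 kWh figure.
WORKING_DAYS = 300


def daily_wh(watts: float, hours: float) -> float:
    """Energy in watt-hours = power in watts x time in hours."""
    return watts * hours


def yearly_kwh(wh_per_day: float, days: int) -> float:
    """Convert a daily Wh figure into kWh over a number of days."""
    return wh_per_day * days / 1000


per_day = daily_wh(LAPTOP_WATTS, HOURS_PER_DAY)   # 400 Wh
every_day = yearly_kwh(per_day, 365)              # 146 kWh
realistic = yearly_kwh(per_day, WORKING_DAYS)     # 120 kWh
share = realistic / HOUSEHOLD_KWH_PER_YEAR        # about 0.011, i.e. ~1.1%

print(f"{per_day:.0f} Wh/day, {every_day:.0f} kWh/yr every day, "
      f"{realistic:.0f} kWh/yr realistic, {share:.1%} of household use")
```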

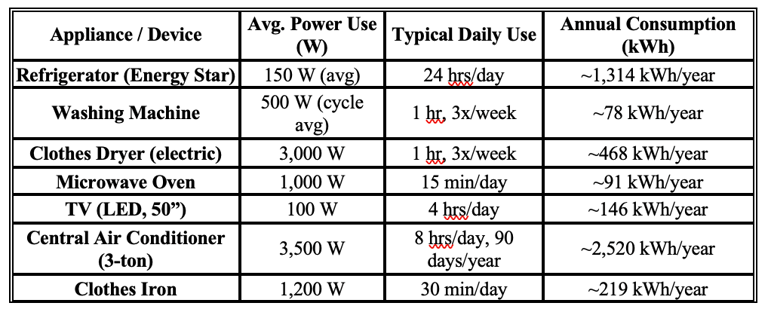

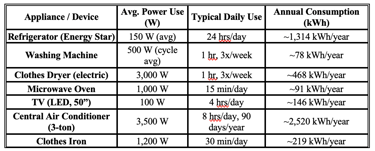

The table below summarizes the energy consumption of some of the most common household appliances.

With this energy scale as our baseline, let us see how much energy AI prompts consume.

Global data centers consume about 415 terawatt-hours (TWh) of electricity per year, roughly 1.5% of global electricity consumption. By 2030, the International Energy Agency projects this could more than double to nearly 945 TWh, close to 3% of the world's total demand. In the U.S. alone, data centers already consume over 4% of national electricity. As of 2024, AI accounts for about 20% of this 415 TWh/year, and forecasts of massive growth in AI infrastructure suggest that share could rise to 50% by the end of 2025!
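The shares quoted above translate into absolute figures as follows. This sketch takes the 415 TWh, 945 TWh, 1.5%, 20% and 50% values from the text; holding the 415 TWh total fixed when applying the 50% share is a simplifying assumption, since total data center demand is itself growing.

```python
DC_TWH_2024 = 415          # global data center consumption, from the text
DC_TWH_2030 = 945          # IEA projection for 2030, from the text
DC_SHARE_OF_GLOBAL = 0.015 # "~1.5% of global electricity"
AI_SHARE_2024 = 0.20
AI_SHARE_END_2025 = 0.50   # forecast quoted in the text

# Total global electricity demand implied by "415 TWh is ~1.5%".
implied_global_twh = DC_TWH_2024 / DC_SHARE_OF_GLOBAL   # ~27,700 TWh

# Growth factor implied by the 2030 projection.
growth_2024_to_2030 = DC_TWH_2030 / DC_TWH_2024          # ~2.3x

# AI's slice today, and under the 50% forecast if the total stayed at 415 TWh
# (a simplifying assumption; the total is also expected to grow).
ai_twh_2024 = DC_TWH_2024 * AI_SHARE_2024                # ~83 TWh
ai_twh_end_2025 = DC_TWH_2024 * AI_SHARE_END_2025        # 207.5 TWh

print(f"Implied global demand: {implied_global_twh:,.0f} TWh")
print(f"Data center growth to 2030: {growth_2024_to_2030:.2f}x")
print(f"AI share: {ai_twh_2024:.0f} TWh (2024) -> {ai_twh_end_2025:.1f} TWh (50%)")
```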

To understand where exactly AI data centers consume power, let us follow the AI query workflow: from prompt, to tokenization, to generated response.

Step 1 – User prompt enters the system.

Process: You type your question and hit Enter. Your device sends the request over the internet to the AI provider’s servers.

Energy consumed: Your device: ~0.001–0.01 Wh (negligible)

Network transmission: ~0.02–0.2 Wh per MB of data (varies by network)
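To see why the network share of a single text prompt is small, multiply the payload size by the per-megabyte range above. This is a minimal sketch; the 2 KB request size is a hypothetical example, and real request sizes vary with prompt length and protocol overhead.

```python
from typing import Tuple

# Network energy intensity range from the text, in Wh per megabyte.
WH_PER_MB_LOW = 0.02
WH_PER_MB_HIGH = 0.2


def network_energy_wh(payload_bytes: int) -> Tuple[float, float]:
    """Return (low, high) transmission energy in Wh for a payload size."""
    megabytes = payload_bytes / 1_000_000  # decimal megabytes
    return megabytes * WH_PER_MB_LOW, megabytes * WH_PER_MB_HIGH


# Hypothetical 2 KB request carrying a text prompt.
low, high = network_energy_wh(2_000)
print(f"Prompt transmission: {low:.5f}-{high:.4f} Wh")
```

Even at the high end this is a tiny fraction of a watt-hour, far below the inference figures in Step 3.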

Step 2 – Data center receives the request.

Process: Your prompt hits a load balancer, which decides which AI server should handle it, and is then routed to a GPU cluster hosting the model.

Energy consumed: Networking & load balancing: ~0.01–0.05 Wh. Storage systems retrieving model weights: negligible per query.
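The load balancer's job can be illustrated with a least-connections policy, one common strategy that sends each request to the server with the fewest requests in flight. This is a generic sketch, not how any particular AI provider routes traffic; the `GpuServer` class and the server names are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class GpuServer:
    """Hypothetical model server with a count of in-flight requests."""
    name: str
    active_requests: int = 0


def route(servers: List[GpuServer]) -> GpuServer:
    """Least-connections routing: pick the least busy server.

    Ties go to the earliest server in the list, because min() returns
    the first minimal element it encounters.
    """
    target = min(servers, key=lambda s: s.active_requests)
    target.active_requests += 1  # the new prompt is now in flight there
    return target


def finish(server: GpuServer) -> None:
    """Mark one request on this server as completed."""
    server.active_requests -= 1


cluster = [GpuServer("gpu-a", 3), GpuServer("gpu-b", 1), GpuServer("gpu-c", 2)]
chosen = route(cluster)
print(chosen.name, [s.active_requests for s in cluster])
```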

Step 3 – Model inference.

Process: The AI model (e.g., GPT-4o or GPT-5) has billions of parameters stored in GPU memory. For each token it generates, the model runs a series of large matrix multiplications on GPUs (such as the NVIDIA A100 or H100).
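The token-by-token nature of generation is what makes energy scale with response length. The toy loop below is a heavily simplified sketch, not a real transformer: a single hypothetical weight matrix stands in for the model's many layers, the weights are random, and only the latest token is used (real models attend over the whole context). It counts multiply-add operations to show that the arithmetic, and therefore the energy, grows with every generated token.

```python
import random
from typing import List, Tuple

Matrix = List[List[float]]


def matvec(matrix: Matrix, vector: List[float]) -> List[float]:
    """Matrix-vector product: the basic operation repeated for each token."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def generate(prompt: List[int], embeddings: Matrix, weights: Matrix,
             n_new_tokens: int) -> Tuple[List[int], int]:
    """Toy greedy decoder; returns the token ids and the multiply-add count."""
    tokens = list(prompt)
    multiply_adds = 0
    for _ in range(n_new_tokens):
        hidden = embeddings[tokens[-1]]        # vector for the latest token
        logits = matvec(weights, hidden)       # one score per vocabulary entry
        multiply_adds += len(weights) * len(hidden)
        # Greedy decoding: append the highest-scoring token id.
        tokens.append(max(range(len(logits)), key=logits.__getitem__))
    return tokens, multiply_adds


random.seed(0)
VOCAB, DIM = 50, 16  # hypothetical toy sizes; real models are vastly larger
embeddings = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(VOCAB)]
weights = [[random.gauss(0, 1) for _ in range(DIM)] for _ in range(VOCAB)]

tokens, ops = generate([1, 2, 3], embeddings, weights, n_new_tokens=300)
print(len(tokens) - 3, "new tokens,", ops, "multiply-adds")
```

Doubling the number of generated tokens doubles the multiply-adds, which is why per-token energy figures are a natural unit for comparing models.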

Energy consumed: Inference accounts for roughly 85–90% of the energy per query. Estimated energy per generated token:

Small models: 0.00002–0.00005 Wh/token.

GPT-3.5-scale: ~0.0001–0.0002 Wh/token.

GPT-4-scale: ~0.002–0.005 Wh/token (depends on hardware efficiency).

A 300-token ChatGPT response (about 225 words) could consume ~0.6 Wh at the lower GPT-4-scale estimate, roughly what your 50 W laptop uses in 45 seconds. Multiply that by millions of queries, and the humongous appetite of AI becomes clear.
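The per-token ranges above turn into per-response estimates by simple multiplication. This sketch uses the text's own ranges and the 50 W laptop from the opening example; the model-class labels are just dictionary keys chosen for illustration, not official model names.

```python
from typing import Dict, Tuple

# Estimated energy per generated token (Wh), ranges taken from the text.
PER_TOKEN_WH: Dict[str, Tuple[float, float]] = {
    "small": (0.00002, 0.00005),
    "gpt-3.5-scale": (0.0001, 0.0002),
    "gpt-4-scale": (0.002, 0.005),
}
LAPTOP_WATTS = 50  # home office laptop from the opening example


def response_energy_wh(n_tokens: int, model_class: str) -> Tuple[float, float]:
    """(low, high) energy in Wh for a response of n_tokens."""
    low, high = PER_TOKEN_WH[model_class]
    return n_tokens * low, n_tokens * high


def laptop_seconds(energy_wh: float, watts: float = LAPTOP_WATTS) -> float:
    """How long the laptop could run on the same energy, in seconds."""
    return energy_wh / watts * 3600


for model_class in PER_TOKEN_WH:
    low, high = response_energy_wh(300, model_class)
    print(f"{model_class:>14}: {low:.3f}-{high:.3f} Wh "
          f"({laptop_seconds(low):.1f}-{laptop_seconds(high):.1f} s of laptop use)")
```

At the lower GPT-4-scale bound, a 300-token answer comes to 0.6 Wh, about 43 seconds of the laptop's 50 W draw.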

Step 4 – Sending the answer back.

Process: The output tokens are streamed back to your browser or app over the network.

Energy consumed: Networking: ~0.02–0.2 Wh per MB. Your device’s display & CPU: 0.5–2 Wh while reading it (depending on brightness and device type).
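Adding up the four steps gives a rough per-query budget. This sketch uses the ranges from the text; the 10 KB of combined request and response traffic and the choice of a 300-token GPT-4-scale answer are assumptions, and storage is left out because the text treats it as negligible per query.

```python
from typing import Dict, Tuple

Range = Tuple[float, float]

NETWORK_WH_PER_MB: Range = (0.02, 0.2)
TRAFFIC_MB = 0.01  # assumption: ~10 KB of prompt + streamed response

STEP_WH: Dict[str, Range] = {
    "1. device sends prompt": (0.001, 0.01),
    "2. networking & load balancing": (0.01, 0.05),
    "3. inference (300 tokens, GPT-4-scale)": (300 * 0.002, 300 * 0.005),
    "4. device display & CPU while reading": (0.5, 2.0),
    "network transmission (both directions)": (
        TRAFFIC_MB * NETWORK_WH_PER_MB[0],
        TRAFFIC_MB * NETWORK_WH_PER_MB[1],
    ),
}


def total(steps: Dict[str, Range]) -> Range:
    """Sum the low and high ends of every step independently."""
    return (sum(lo for lo, _ in steps.values()),
            sum(hi for _, hi in steps.values()))


low, high = total(STEP_WH)
for name, (lo, hi) in STEP_WH.items():
    print(f"{name:<42} {lo:.4f}-{hi:.4f} Wh")
print(f"{'total per query':<42} {low:.3f}-{high:.3f} Wh")
```

Summing lows and highs separately gives the widest plausible band. On these figures, the energy your own screen uses while you read the answer is of the same order as the inference itself.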

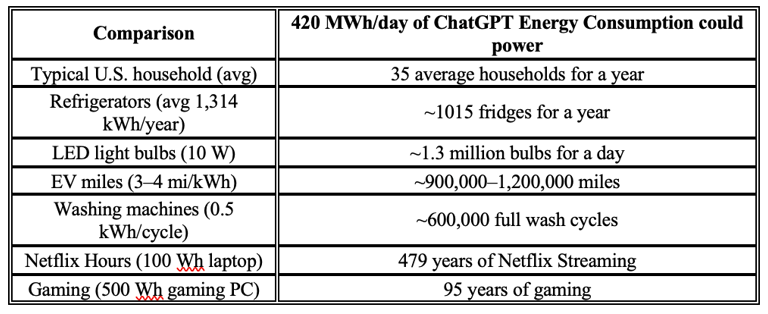

Realistically, if GPT-4o and GPT-5 alone handle 700 million queries a day at ~0.6 Wh each, that's about 420 MWh daily. The table below summarizes the equivalent energy use of everyday tasks.
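The 420 MWh figure follows directly from the 700 million queries and the ~0.6 Wh per response estimated in Step 3. The sketch below reproduces it and, as an extra comparison, expresses it in the 400 Wh laptop-days from the opening example; the annual total simply assumes the daily volume stays constant.

```python
QUERIES_PER_DAY = 700_000_000   # from the text
WH_PER_QUERY = 0.6              # 300-token GPT-4-scale response, lower bound
LAPTOP_WH_PER_DAY = 400         # 50 W x 8 h, from the opening example

daily_wh = QUERIES_PER_DAY * WH_PER_QUERY
daily_mwh = daily_wh / 1_000_000            # ~420 MWh
laptop_days = daily_wh / LAPTOP_WH_PER_DAY  # ~1.05 million laptop-days
yearly_gwh = daily_mwh * 365 / 1_000        # ~153 GWh if volume stays flat

print(f"{daily_mwh:.0f} MWh/day = {laptop_days:,.0f} laptop-days; "
      f"~{yearly_gwh:.0f} GWh/year")
```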

Video generation is far more power-intensive, with estimates of 50–100 Wh of energy per 6-second video. At the upper end, that is equivalent to about 25 smartphone charges or running a 10 W LED bulb for 10 hours. Image generation is hungry too: an AI-generated image is estimated to consume about 1.7 Wh of energy, so 1,000 such images (1.7 kWh) are enough to wash your weeks of piled-up laundry.
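The video and image comparisons can be reproduced the same way. This sketch uses the text's 50–100 Wh per video, 10 W LED bulb, 1.7 Wh per image and the ~0.6 Wh chatbot response from Step 3; it skips the smartphone and laundry comparisons because the per-charge and per-load energy they rely on are not given in the text.

```python
VIDEO_WH = (50, 100)   # per 6-second AI video, from the text
LED_WATTS = 10         # LED bulb from the text
IMAGE_WH = 1.7         # per AI-generated image, from the text
RESPONSE_WH = 0.6      # 300-token GPT-4-scale chatbot response, from Step 3


def led_hours(energy_wh: float, watts: float = LED_WATTS) -> float:
    """Hours a bulb of the given wattage could run on energy_wh."""
    return energy_wh / watts


def batch_kwh(n_items: int, wh_each: float) -> float:
    """Total energy in kWh for generating n_items."""
    return n_items * wh_each / 1000


video_led = tuple(led_hours(wh) for wh in VIDEO_WH)               # (5.0, 10.0) h
responses_per_video = tuple(wh / RESPONSE_WH for wh in VIDEO_WH)  # ~83 to ~167
images_1000 = batch_kwh(1000, IMAGE_WH)                           # 1.7 kWh

print(f"One video = {video_led[0]:.0f}-{video_led[1]:.0f} h of a 10 W LED")
print(f"One video = {responses_per_video[0]:.0f}-{responses_per_video[1]:.0f} chatbot responses")
print(f"1,000 images = {images_1000:.1f} kWh")
```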

This exercise gives a good sense of the appetite of AI chatbots.

It should be noted that AI power draw isn't fixed. Model compression, quantization, and specialized hardware (like NVIDIA's FP8 Tensor Cores or Google's TPUs) can reduce energy use per token by 10× or more. Cooling innovations like liquid immersion can push PUE (Power Usage Effectiveness, the ratio of total facility energy to the energy used by the computing equipment) close to 1.0, meaning almost no energy is spent on cooling and other overhead.
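Because PUE is total facility energy divided by IT equipment energy, a facility's total draw is the IT energy multiplied by PUE. The sketch below applies that to the 0.6 Wh response from Step 3, treating it as IT-only energy (an assumption). The PUE values of 1.5 and 1.05 are illustrative assumptions, not measurements of any particular facility, and the 10× saving is the text's figure applied naively.

```python
def facility_energy_wh(it_energy_wh: float, pue: float) -> float:
    """Total facility energy = IT equipment energy x PUE (PUE >= 1 by definition)."""
    if pue < 1.0:
        raise ValueError("PUE cannot be below 1.0")
    return it_energy_wh * pue


IT_WH_PER_RESPONSE = 0.6  # assumed IT-only energy for a 300-token response
scenarios = {
    "conventional cooling (assumed PUE 1.5)": (IT_WH_PER_RESPONSE, 1.5),
    "immersion cooling (assumed PUE 1.05)": (IT_WH_PER_RESPONSE, 1.05),
    "10x leaner model + PUE 1.05": (IT_WH_PER_RESPONSE / 10, 1.05),
}

for label, (it_wh, pue) in scenarios.items():
    total = facility_energy_wh(it_wh, pue)
    overhead = total - it_wh  # cooling, power conversion and other overhead
    print(f"{label:<40} total {total:.3f} Wh, overhead {overhead:.3f} Wh")
```

The comparison suggests why both levers matter: better cooling trims only the overhead, while leaner models shrink the IT energy that the overhead multiplies.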

Still, with demand skyrocketing, efficiency alone may not offset total consumption. As AI continues to scale, the industry faces a choice: make models leaner, power them with clean energy, or watch electricity demand — and carbon emissions — soar. More on the emissions in the next article.

Connect

info@energizetomorrowus.com

© 2025. All rights reserved.