How significant is the Carbon Footprint of AI Chatbots?

In the last article on AI and Energy, we presented a contextualized study to decipher the actual energy appetite of Large Language Models. Since our current electricity grids are not fully decarbonized, every AI chatbot prompt has a carbon footprint. Adding the indirect emissions from data center hardware's lifecycle and supply chain operations reveals the actual carbon emissions associated with running AI models. But how significant is it?

AI AND ENERGY

Sumedh Joshi

9/3/2025 · 4 min read

Mistral, a French AI frontrunner, recently published a lifecycle emissions analysis of its Large 2 LLM. In this first-of-its-kind study, the company reported a footprint of 20.4 ktCO₂e (20.4 thousand tonnes of CO₂-equivalent emissions) for Large 2 as of January 2025, covering model training and 18 months of public use. It also reported that generating a 400-token response emits 1.14 gCO₂e.

LLMs can be divided into two broad categories: Concise LLMs and Reasoning LLMs. Concise LLMs (OpenAI GPT series) produce brief, information-dense outputs with few extraneous words, whereas Reasoning LLMs (OpenAI o1, Google Gemini 2.0) generate many additional internal “thinking” tokens and are therefore more energy-intensive. An average Concise LLM handles about 300 tokens per query, while a Reasoning LLM handles about 550. These figures can be used to arrive at a rough estimate of the carbon emissions from energy use. For ease of calculation, and to rely on data from trusted sources, we consider the ChatGPT 4.1, 5, o1, and o2-mini models in this study.

Carbon Emissions from Energy Usage

From our last article, considering 700 million queries/day for the GPT 4.1 and 5 models and 400 million queries/day for the o1 and o2-mini reasoning models, at 0.6 Wh and 1.1 Wh per query respectively, we arrive at the following daily energy consumption:

GPT 4.1 and 5: 700 million queries/day × 0.6 Wh/query = 420 MWh/day

GPT o1 and o2-mini: 400 million queries/day × 1.1 Wh/query = 440 MWh/day

That equates to about 860 MWh/day of combined energy consumption. In the US, 44% of grid electricity is carbon-free [renewables (26%) + nuclear (18%)], and the rest comes from natural gas (40%), coal (15%), and other sources (1%). This mix gives an average grid carbon intensity of about 0.82 lb CO₂/kWh, or 0.374 tCO₂/MWh.

Hence, the total carbon emissions from the energy consumption of the ChatGPT models are 860 MWh/day × 0.374 tCO₂/MWh = 321.64 tCO₂/day.

This figure is based on the US energy grid and is subject to change for other regions.
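The arithmetic above can be reproduced with a short Python sketch. The query volumes, per-query energy figures, and grid intensity are the ones quoted in this article; the variable and function names are purely illustrative. Keeping the grid intensity as a parameter makes it easy to rerun the estimate for a region with a different grid mix.

```python
# Inputs quoted in the article; names are illustrative, not from any library.
QUERIES_PER_DAY = {"concise": 700e6, "reasoning": 400e6}
WH_PER_QUERY = {"concise": 0.6, "reasoning": 1.1}
US_GRID_INTENSITY_T_PER_MWH = 0.374  # US average used in the article


def daily_energy_mwh(queries, wh_per_query):
    """Return daily energy use in MWh per model family (1 MWh = 1e6 Wh)."""
    return {name: queries[name] * wh_per_query[name] / 1e6 for name in queries}


def daily_emissions_t(energy_mwh, intensity_t_per_mwh):
    """Return daily emissions in tonnes of CO2 for a given grid intensity."""
    return sum(energy_mwh.values()) * intensity_t_per_mwh


energy = daily_energy_mwh(QUERIES_PER_DAY, WH_PER_QUERY)
emissions = daily_emissions_t(energy, US_GRID_INTENSITY_T_PER_MWH)
print(f"{sum(energy.values()):.0f} MWh/day -> {emissions:.2f} tCO2/day")
```

Passing a different intensity value to `daily_emissions_t` gives the corresponding estimate for another grid, under the same query and energy assumptions.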

Carbon Emissions from Data Center Lifecycle and Supply Chain Operations

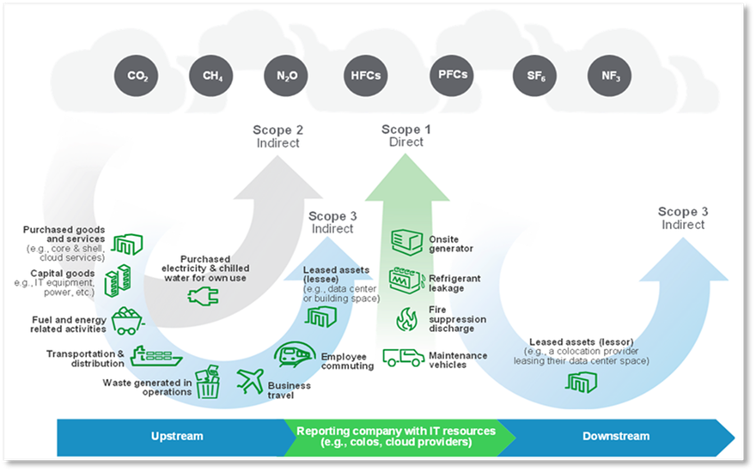

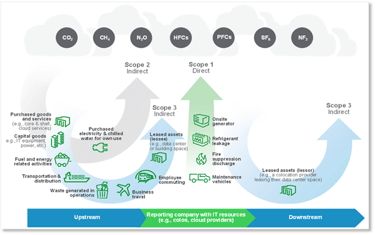

The above figure depicts the lifecycle of an AI datacenter.

Here, Scope 1 covers emissions from the combustion of fossil fuels in the organization's furnaces, boilers, and vehicles, which are minuscule. Scope 2 emissions come from the consumption of energy, and Scope 3 emissions come from the transportation and distribution of the organization's products and from its wider operations. A recently published article by Data Center Dynamics reports that the supply chain and lifecycle account for 38% of total data center and AI-related emissions. It also reports that Scope 3 emissions are the hardest to calculate, owing to meager supplier data, a lack of quantitative tools, and non-standardized accounting and reporting practices.

For this study, we assume that 38% of the total data center carbon emissions are Scope 3 emissions. Given that the Scope 2 emissions we have already calculated make up the remaining 62%, the Scope 3 emissions can be calculated as follows:

Scope 3 (lifecycle and supply chain) emissions: (38% × 321.64 tCO₂/day × 365 days) / (62%) = 0.072 MtCO₂/year

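We can calculate the total ChatGPT emissions by adding the Scope 2 and Scope 3 emissions. Scope 1 emissions are minuscule and are treated as negligible here.

Total ChatGPT AI emissions/year = 0.117 MtCO₂ (Scope 2) + 0.072 MtCO₂ (Scope 3) = 0.189 MtCO₂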
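As a rough check, the scaling from daily Scope 2 emissions to the annual total can be sketched in Python. The 38% Scope 3 share and the 321.64 tCO₂/day figure come from this article; the function name and structure are illustrative only.

```python
DAILY_SCOPE2_T = 321.64  # tCO2/day from energy use, as calculated in the article
SCOPE3_SHARE = 0.38      # assumed share of total emissions that is Scope 3


def annual_emissions_mt(daily_scope2_t, scope3_share, days=365):
    """Return (scope2, scope3, total) in MtCO2/year.

    Scope 1 is treated as negligible, so Scope 2 makes up the
    remaining (1 - scope3_share) of the total.
    """
    scope2 = daily_scope2_t * days / 1e6  # tonnes -> megatonnes
    total = scope2 / (1.0 - scope3_share)
    scope3 = total * scope3_share
    return scope2, scope3, total


scope2, scope3, total = annual_emissions_mt(DAILY_SCOPE2_T, SCOPE3_SHARE)
print(f"Scope 2: {scope2:.3f}  Scope 3: {scope3:.3f}  Total: {total:.3f} MtCO2/year")
```

Under these assumptions the sketch gives about 0.117 MtCO₂/year for Scope 2, about 0.07 MtCO₂/year for Scope 3, and 0.189 MtCO₂/year in total.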

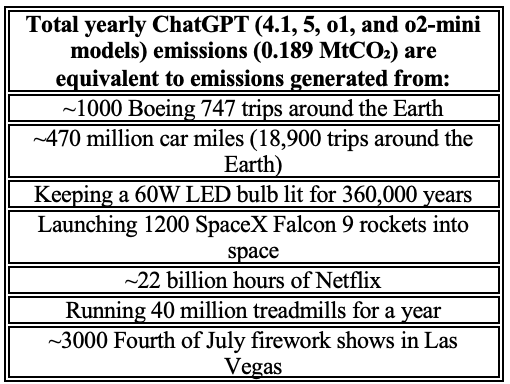

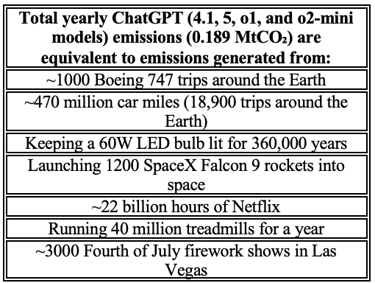

0.189 MtCO₂/year of emissions is equivalent to the activities tabulated in Table 1.

It should be noted that the 0.189 MtCO₂/year figure covers only the Scope 2 and 3 emissions from running the public versions of the ChatGPT models mentioned above. It does not include the emissions generated during model training, which would require a deeper understanding of training protocols and methodologies that are rarely disclosed publicly. For more complex models used for image and video generation, the emissions per query are 10-15x higher due to the larger inference workload.

To put things into context, the total vehicle miles driven worldwide each year is 11.3 trillion. The yearly ChatGPT emissions calculated here are equivalent to a minuscule 470 million vehicle miles. Even so, measures to further reduce data center emissions remain necessary.
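The vehicle-mile comparison can be sketched as follows. The annual emissions and the worldwide mileage are taken from this article; the per-mile emission factor of about 400 g CO₂ for a typical passenger vehicle is an assumption introduced here for illustration, and the article's own factor may differ slightly.

```python
ANNUAL_EMISSIONS_T = 0.189e6   # tCO2/year, from the article
WORLD_VEHICLE_MILES = 11.3e12  # miles/year, from the article
G_CO2_PER_MILE = 400.0         # ASSUMPTION: typical passenger vehicle


def equivalent_vehicle_miles(emissions_t, g_per_mile):
    """Convert tonnes of CO2 into the vehicle miles that emit the same amount."""
    return emissions_t * 1e6 / g_per_mile  # 1 tonne = 1e6 grams


miles = equivalent_vehicle_miles(ANNUAL_EMISSIONS_T, G_CO2_PER_MILE)
share = miles / WORLD_VEHICLE_MILES
print(f"{miles / 1e6:.1f} million miles, {share:.4%} of worldwide vehicle miles")
```

With this assumed factor, the result is roughly 470 million miles, which is on the order of 0.004% of the miles driven worldwide each year.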

As of 2025, AI datacenters are responsible for 0.5% of global GHG emissions, and this share is expected to rise to 1% by 2030, owing to increasing AI datacenter CAPEX and the overall demand for AI. Some measures to reduce emissions from datacenter operations are:

Increasing the use of decarbonized energy, especially nuclear energy. Relying on point-source renewable energy will also sharply reduce emissions.

Integration of sustainable supply chain methods, like the use of fuel-efficient freight, vehicles, and airplanes, and the reduction of waste and promotion of recycling of materials (circular construction).

Designing energy-efficient AI algorithms.

Building energy-efficient modular data center designs that have high energy density and are considerably more efficient than traditional data centers.

Building data center designs with a low PUE (Power Usage Effectiveness), meaning a ratio of total facility energy to IT equipment energy that is close to 1, which saves energy, costs, and material requirements.

Ensuring strict adherence to data center emissions policies that regulate the emissions and power used by a data center (such as the EU Energy Efficiency Directive, US Federal and State Climate Disclosure rules, and the Climate Corporate Data Accountability Act).
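To make the PUE measure in the list above concrete: PUE is the ratio of total facility energy to the energy used by the IT equipment alone, so values closer to 1 mean less overhead for cooling and power delivery. The sketch below uses hypothetical figures (a 100 MWh/day IT load and PUE values of 1.6 and 1.2) that are not taken from this article.

```python
def pue(total_facility_mwh, it_equipment_mwh):
    """Power Usage Effectiveness: total facility energy / IT equipment energy."""
    if it_equipment_mwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    return total_facility_mwh / it_equipment_mwh


def facility_energy_mwh(it_equipment_mwh, pue_value):
    """Total facility energy implied by an IT load and a PUE value."""
    return it_equipment_mwh * pue_value


# Hypothetical example values, chosen only for illustration.
IT_LOAD_MWH = 100.0
legacy = facility_energy_mwh(IT_LOAD_MWH, 1.6)
efficient = facility_energy_mwh(IT_LOAD_MWH, 1.2)
print(f"Legacy: {legacy:.0f} MWh, efficient: {efficient:.0f} MWh, "
      f"saved: {legacy - efficient:.0f} MWh/day")
```

For the same IT workload, the lower-PUE facility draws less total energy, and the saving translates directly into lower Scope 2 emissions at a given grid intensity.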

The path to a decarbonized AI future is a difficult one. Nevertheless, a symbiotic relationship among governments, organizations, and the scientific community is critical and has the potential to overcome this challenge.

Table 1. Emission comparison study
